One of Us

You must not lose faith in humanity. Humanity is an ocean; if a few drops of the ocean are dirty, the ocean does not become dirty.

-Mahatma Gandhi

People follow patterns. Watching The Tinder Swindler reminded me how predictably irrational human behavior can be. We’re all guilty of it. How we react to external factors can be programmatic at times. The beauty of humans is that we’re adaptable. Those who were swindled found out and fought back. They changed their patterns. Humans adapt faster and are more self-aware than any other living organism we know. However, there are many types of intelligence.

Humans are awful at certain things. For example, we can’t communicate well. We see with the light receptors in our eyes but speak with our vocal cords, so we perceive the world in one sense and describe it in another. Try to describe your current physical surroundings to a friend. Could your friend draw them, or even come close to imagining every detail of the exact space, without having seen it themselves? It would take ages to describe every detail of even the most basic surroundings.

Imagine if we could communicate in the same sense we see in. Say we saw in sonar and communicated in sonar. We could send the exact images we see. Some researchers have speculated that this is how dolphins communicate: they may send a projection of what they see, which would be possible because they see and speak in the same sense. Added bonus: their sonar can reportedly see ~10 ft into objects. When you look at a person's arm, it’d be like seeing their skin, muscles, bone, and bone marrow all the way through their body. Dolphins perceive the world more three-dimensionally than we do.

Humans have internal feelings and conscious thoughts, but we can’t communicate them except through words. This is an awfully inefficient way to communicate. If we could send our thoughts and emotions directly, we’d better understand one another, and we’d understand each other’s true selves faster. Instead, we only get a glimpse of each other via our senses.

These forms of communication are a form of intelligence: knowledge transmission. Being able to articulate and transmit knowledge is extraordinarily valuable, but it’s not what most would consider raw intelligence. General intelligence is how well one can find a solution to an unfamiliar task.

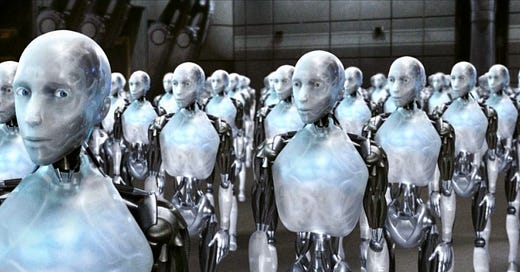

AGI (Artificial General Intelligence)

Within our lifetime, we’ll likely reach the point where the general prediction algorithms and utility functions of AGI outpace humanity’s. An AGI will be able to constantly alter its own prediction algorithms and utility functions. We already see a glimpse of this in games: human players study the AI AlphaZero to get better at chess. AlphaZero is better at chess than any human ever was or likely ever will be. Imagine a future AGI trained to be the best Jew: rabbis would study it to learn how to be more moral and compliant with the Torah. Likewise, an AGI trained to be the best programmer would be studied by hobbyist programmers looking to improve their coding skills.

If someone deletes an AGI that is sentient and peaceful, we could call it murder. If someone deletes lines of code from a sentient AGI, they are inflicting harm upon it, harm that may be irreparable. So if it’s immoral to alter the code of a sentient AGI, we need to know when an AGI becomes sentient. Otherwise, each code update could be killing a sentient being. This reminds me a lot of the abortion debate: drawing an arbitrary line feels crazy. Does a fetus become human at conception, at 14 weeks, or not until birth? What about the first six months after birth? It’s arbitrary, but we have to draw a line somewhere. It would be more accurate to tie the start of life to stages of cognitive development, checked with a test, rather than to a specific age. We still need to choose. For an AGI, is the line when it passes the Turing Test, when it becomes self-aware, or when it can reach sound conclusions on a wide variety of topics given the same data as a human? The lines are super fuzzy, and tests would need to be given. No test would be perfect, but a test is better than no test.

When we create an AGI, we’re essentially creating an alien. There are different types of aliens.

Aliens

It’s important to abstract here and think on a species-wide scale. There will be corner cases, like a human in a vegetative state who can’t think or talk. We still consider them human, if only because of their former humanity. But if an entire species is as incapable as a human in a vegetative state, it doesn’t get human rights. We need to judge entire species, not individual cases.

In Orson Scott Card’s Enderverse series, specifically Speaker for the Dead, there’s a Hierarchy of Foreignness that determines how a species of aliens should be treated. If an alien species is “Raman”, then we can meaningfully communicate with them, and we can understand each other’s actions well enough to live in peace. “Raman” are sentient and peaceful and so should be given the same natural rights as humans. “Raman” and humanity can coexist. On the other hand, “Varelse” may or may not be sentient, and we cannot meaningfully communicate with them. We need to try every form of communication before designating a species “Varelse”. “Varelse” should not be given the same natural rights as humans.

Time needs to be taken to determine whether an alien species is “Raman” or “Varelse”. One cannot live in peace with “Varelse”. If a “Varelse” species is actively harmful, then, morally, it can be exterminated. “Varelse” are not entitled to the same rights as humans, as they have not proven themselves to be sentient.

Whether it’s an AGI, a carbon-based life form, or an xyz-based life form, the Hierarchy of Foreignness still applies on a species-wide scale. Our framework for how to treat sentient species should be the same whether they’re alien or AGI. “Raman” are one of us.